Technology is what drives functional genomics: it allows us to ask new questions at the genome level. This is possible because high-throughput technology lets us ask questions in parallel, giving us more power to infer new insights.

Each stage of the technology life cycle carries different risks and benefits:

While a technology is still in the research and development (R & D) stage, it depends heavily on external funding to survive, which may come from industry or academia. Investing at this stage carries high risk, but it also promises high benefit if the technology succeeds. Adopting at this stage means you have to invest in order to gain early access to the technology. Because the technology is new, analysis processes have not been developed yet. This offers a challenging opportunity: to solve problems that others have not been able to answer, and to develop analysis methods before the competition does. It is also very risky, because the technology may fail and disappear if nobody is interested in it.

In the ascent stage, you still have the opportunity to become an early adopter, but the technology is more widely available and analysis processes are rapidly maturing. The risk is lower, because a technology that has passed the R & D phase is less likely to fail.

In the mature stage, risks are low because the technology is widely available, methods are well established, and commercial developers are stable because they have a good income. Many problems have already been addressed, both in terms of technology development and biological application.

Adopting a technology in the decline stage is generally a bad idea, because access to it might be lost at any time. Most problems have probably already been answered, or better alternatives have been developed. Technology development and expertise are declining, which lowers the value of the technology.

Current Status of FGT

Expression microarrays and SNP microarrays are in the mature phase but not yet in decline, although their use is slowing. Standard RNA-seq and ChIP-seq are in ascent towards maturity. Mass spectrometry is coming out of the R & D phase and into ascent. ChIP-on-chip, Roche 454, Helicos and SOLiD are in decline, and some have been discontinued.

Emerging Technology

1. High-Throughput Platform: L-1000

Microarray profiling costs a lot of money, which limits the number of samples and replicates. Even though it is cheaper than RNA-seq, cost is still a constraint. A lesson from microarray data is that genes do not all change independently across a dataset: we have already identified clusters of genes that change together. This means we don't have to measure every gene; instead, we can measure one gene as a proxy for each representative gene set.
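The proxy idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the L-1000 pipeline: we assume the proxy is the cluster member with the highest mean correlation to the other members, and that another member is predicted from the proxy with a simple linear least-squares fit. The function names `pick_proxy` and `fit_from_proxy` and the toy data are hypothetical.

```python
import numpy as np

def pick_proxy(expr, cluster):
    """Pick the cluster member whose profile has the highest mean Pearson
    correlation with the other members (an illustrative criterion)."""
    corr = np.corrcoef(expr[cluster])        # gene-by-gene correlations
    np.fill_diagonal(corr, np.nan)           # ignore self-correlation
    return cluster[int(np.nanargmax(np.nanmean(corr, axis=1)))]

def fit_from_proxy(expr, proxy, target):
    """Least-squares fit of target ~ a * proxy + b across samples."""
    x, y = expr[proxy], expr[target]
    design = np.column_stack([x, np.ones_like(x)])
    (a, b), *_ = np.linalg.lstsq(design, y, rcond=None)
    return a, b

# Hypothetical genes x samples matrix: genes 0-2 form one co-expressed cluster.
rng = np.random.default_rng(0)
signal = rng.normal(size=20)
expr = np.vstack([
    signal,                                   # gene 0
    2.0 * signal + 1.0,                       # gene 1: linear in gene 0
    signal + rng.normal(scale=0.5, size=20),  # gene 2: noisy member
    rng.normal(size=20),                      # gene 3: unrelated
])
proxy = pick_proxy(expr, [0, 1, 2])
a, b = fit_from_proxy(expr, 0, 1)   # recovers gene 1 from gene 0: a ~ 2, b ~ 1
```

Once such a fit is learned on fully profiled training data, only the proxy needs to be measured in new samples; the rest of the cluster is estimated.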

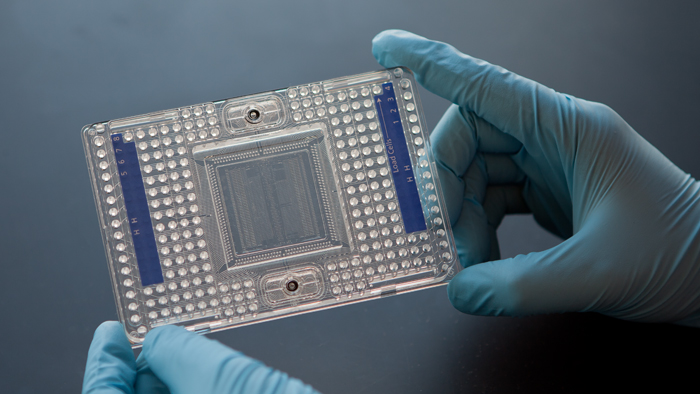

The L-1000 is a bead-based technology. It builds on microarray principles, but here each bead carries the oligonucleotides needed for the assay. What is remarkable is that the assay runs on plates (NOT microarrays), which means samples can be run in large numbers and very inexpensively. This high-throughput approach is very valuable for pharmaceutical companies that want to profile all the drugs in their collection and work out the relationships among them. The underlying idea is actually old; what is new is scaling it up and running it in parallel. Data processing for the platform is very similar to that for microarrays: normalisation, gene expression estimation and clustering. It has broken records on GEO: in a very short time, and from only a few experiments, it has submitted almost as much data as the world's entire collection of human expression profiles from the last ten years!
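A minimal sketch of the microarray-style processing mentioned above, followed by a drug-by-drug comparison. We assume quantile normalisation (a common microarray choice; whether the L-1000 pipeline uses exactly this step is an assumption here) and Pearson correlation as the measure of similarity between drug signatures. The data are hypothetical.

```python
import numpy as np

def quantile_normalise(x):
    """Force every sample (column) of a genes x samples matrix onto the same
    distribution: the mean of the sorted columns. Ties are broken by sort
    order, which is a simplification."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, axis=0)                # gene indices in ascending order per sample
    reference = np.sort(x, axis=0).mean(axis=1)  # shared target distribution
    out = np.empty_like(x)
    for j in range(x.shape[1]):
        out[order[:, j], j] = reference          # k-th smallest gene gets k-th reference value
    return out

# Hypothetical profiles: 4 genes x 3 drug treatments.
raw = np.array([[5.0, 4.0, 3.0],
                [2.0, 1.0, 4.0],
                [3.0, 4.0, 6.0],
                [4.0, 2.0, 8.0]])
norm = quantile_normalise(raw)
similarity = np.corrcoef(norm, rowvar=False)   # drug-by-drug Pearson correlation
```

Normalising first puts every treatment on the same scale, so the resulting similarity matrix reflects relative changes between drugs rather than overall intensity differences; it can then be clustered.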

2. New ChIP-seq & Related Technologies

These new technologies have been developed to probe the epigenome. Two of them are:

a. ChIP-Exonuclease

ChIP-exo overcomes a problem of ChIP-seq, in which a TF binding site can only be localised to somewhere within the purified fragment. In ChIP-seq, an antibody pulls down the protein of interest together with its bound DNA; the DNA is purified, sequenced and analysed to identify binding peaks, from which the binding motif is determined. The resolution of this approach is limited: it cannot tell exactly where the protein binds if there are two binding sites in close proximity. A TF binding site is only a few bp long, but the fragments are typically a few hundred bp. ChIP-exo addresses this by digesting the ChIP fragments with an exonuclease, which effectively localises the exact site of TF binding. The exonuclease runs from 5' to 3' and chops the DNA until it reaches the region where the TF is cross-linked, which protects the DNA from further digestion.
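Because digestion stops at the cross-linked protein, the 5' ends of reads from the plus strand pile up at the left border of the bound region and the 5' ends of minus-strand reads pile up at the right border. The sketch below uses that to estimate a footprint. It is deliberately simple: it assumes a single binding event and takes the most frequent 5' position on each strand, whereas real ChIP-exo peak callers model the read distributions. The function name and coordinates are hypothetical.

```python
from collections import Counter

def chip_exo_site(plus_5prime, minus_5prime):
    """Estimate a TF footprint from ChIP-exo read 5' end positions.

    Plus-strand 5' ends accumulate at the left border of the protected
    region, minus-strand 5' ends at the right border. Uses the most common
    position on each strand. Returns (left_border, right_border, midpoint).
    """
    left = Counter(plus_5prime).most_common(1)[0][0]
    right = Counter(minus_5prime).most_common(1)[0][0]
    return left, right, (left + right) / 2

# Hypothetical 5' end coordinates of reads around one binding site.
plus = [1000, 1000, 1000, 999, 1001, 1000]
minus = [1020, 1020, 1019, 1020, 1021]
site = chip_exo_site(plus, minus)   # (1000, 1020, 1010.0)
```

The two borders are only about 20 bp apart here, which is the kind of resolution ChIP-seq fragments of a few hundred bp cannot provide.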

b. Conformation Capture Technologies

Conformation capture can analyse the folded structure of chromosomes. This 3D structure is hard to measure directly. The technology captures spatial contacts between pieces of chromatin in the genome: contacting fragments are ligated and captured, then sequenced. In other words, it creates an artificial junction between pieces of DNA that are physically close because they are held together by a common complex.

The technology has to address the N^2 problem of the genome: if any point in the genome can potentially contact any other point, we would need on the order of N^2 sequencing to see every connection. This is far too much sequencing! So how do we reduce the complexity? If we capture only known regulatory sequences, can we see how they connect to each other? Regulatory regions make up only about 1% of the genome, so it is possible to sequence their contacts at a reasonable resolution, and from these contacts one can infer the 3D structure of the genome. In essence, the technology focuses on regulatory regions to capture the 3D structure of the genome.
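The arithmetic behind the N^2 problem can be made concrete. The numbers below are assumptions for illustration: a genome of roughly 3 × 10^9 bp (a rounded human genome size), a hypothetical 1 kb bin resolution, and the text's figure that regulatory regions cover about 1% of the genome. Counting unordered pairs gives N(N-1)/2, which grows as N^2.

```python
def n_pairs(n_bins):
    """Number of distinct unordered pairs of bins: N(N-1)/2, i.e. ~N^2/2."""
    return n_bins * (n_bins - 1) // 2

GENOME_BP = 3_000_000_000            # rounded human genome size (assumption)
BIN_BP = 1_000                       # hypothetical 1 kb resolution
all_bins = GENOME_BP // BIN_BP       # 3,000,000 bins
regulatory_bins = all_bins // 100    # ~1% of the genome, as in the text

full = n_pairs(all_bins)             # ~4.5e12 possible contacts
targeted = n_pairs(regulatory_bins)  # ~4.5e8 possible contacts
reduction = full / targeted          # ~1e4-fold fewer pairs to sample
```

Because the pair count is quadratic, shrinking the target to 1% of the genome cuts the number of possible contacts by roughly 100^2 = 10^4, not just 100.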

3. Single Cell Technologies

Right now, we are very interested in analysing single cells. Why? Because all the technologies above use a mixture of cells as the sample, so each measurement is a weighted average over that mixture. This means that contamination by one cell type could change the transcription profile and lead to false conclusions. By profiling one cell at a time, we can measure transcript changes within that single cell.
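The weighted-average point can be shown with a toy mixture. This sketch assumes bulk expression is exactly the fraction-weighted sum of the cell-type profiles, an idealisation; the cell types, genes and values are hypothetical.

```python
import numpy as np

def bulk_profile(cell_type_profiles, fractions):
    """Bulk expression as the fraction-weighted average of cell-type profiles.
    cell_type_profiles: (n_types, n_genes); fractions must sum to 1."""
    fractions = np.asarray(fractions, dtype=float)
    if not np.isclose(fractions.sum(), 1.0):
        raise ValueError("cell-type fractions must sum to 1")
    return fractions @ np.asarray(cell_type_profiles, dtype=float)

# Two hypothetical cell types; gene 2 is expressed only in type B.
profiles = [[10.0, 5.0, 0.0],    # type A
            [10.0, 5.0, 50.0]]   # type B
pure = bulk_profile(profiles, [1.0, 0.0])          # about [10, 5, 0]
contaminated = bulk_profile(profiles, [0.9, 0.1])  # about [10, 5, 5]: gene 2 now looks expressed
```

A 10% contamination makes gene 2 appear to be expressed in what is meant to be a type A sample, which is exactly the false conclusion that single-cell profiling avoids.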

However, single-cell sequencing is still limited by the affinity of reverse transcriptase for its template, and it suffers from amplification biases. A cell contains only 10^5 to 10^6 mRNA molecules, expressing about 10k genes, so this small amount of material needs to be amplified.
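Two quick calculations show why amplification is both necessary and a source of bias. The first uses the text's numbers to get the average number of molecules per gene. The second assumes idealised exponential amplification (copies multiplied by 1 + efficiency each cycle) with hypothetical per-cycle efficiencies, to show how a small efficiency difference distorts a true 1:1 ratio.

```python
def amplified_copies(start_molecules, efficiency, cycles):
    """Idealised exponential amplification: each cycle multiplies the copy
    number by (1 + efficiency), with efficiency between 0 and 1."""
    return start_molecules * (1 + efficiency) ** cycles

# Average molecules per gene for 1e5 - 1e6 molecules spread over ~10k genes.
per_gene_low, per_gene_high = 10**5 / 10_000, 10**6 / 10_000   # 10.0, 100.0

# Two transcripts start at a true 1:1 ratio but amplify with slightly
# different (hypothetical) per-cycle efficiencies over 20 cycles.
ratio = amplified_copies(10, 1.00, 20) / amplified_copies(10, 0.90, 20)  # about 2.8, not 1.0
```

With only tens of molecules per gene on average, amplification cannot be skipped, yet a 10% efficiency difference compounds over 20 cycles into a nearly threefold error.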

Microfluidics allows individual cells to be separated into wells and processed one cell at a time: RNA is taken from each cell, probes are generated, and the sequencing reactions are carried out in parallel using only tiny volumes. This is ideal for processing the small amount of RNA from one or a few cells, and microfluidics increases single-cell amplification efficiency. However, the data are still very challenging, because each cell contains only a tiny number of transcripts and the technology still struggles to achieve representative amplification (rare transcripts are never amplified enough).
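Why rare transcripts get lost can be sketched with a simple sampling model. We assume, purely for illustration, that the number of molecules of a transcript that are captured and amplified is Poisson-distributed with mean (molecules in the cell × capture efficiency); the efficiency value is hypothetical.

```python
import math

def detection_probability(molecules_in_cell, capture_efficiency):
    """P(at least one molecule of a transcript is captured), assuming the
    captured count is Poisson with mean molecules_in_cell * capture_efficiency."""
    return 1.0 - math.exp(-molecules_in_cell * capture_efficiency)

EFFICIENCY = 0.1   # hypothetical fraction of molecules captured and amplified
rare = detection_probability(1, EFFICIENCY)      # ~0.095: usually missed
common = detection_probability(100, EFFICIENCY)  # ~0.99995: almost always seen
```

Under this model a transcript present as a single copy is detected in fewer than one cell in ten, so a zero in single-cell data often means "not captured" rather than "not expressed".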

Single-cell technology allows us to make new discoveries about cells at very high resolution. This could open a new era in biology.

For example, consider 'transcriptional bursting' in single cells going through the cell cycle: the genes involved in the cell cycle become very active and then inactive again. When a statistical analysis was run to find differentially expressed genes, the hits turned out to be the cell-cycle-related genes. Measuring this makes it possible to predict where each cell is in its cycle and to adjust the measurements accordingly.
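One way to adjust for cell-cycle effects is sketched below. This is an assumed approach, not necessarily the one used in the study described: each cell is scored by the mean expression of known cell-cycle genes, and the linear effect of that score is regressed out of every gene. The data, the choice of cell-cycle genes and the function name `regress_out` are hypothetical.

```python
import numpy as np

def regress_out(expr, covariate):
    """Remove the linear effect of a per-cell covariate from every gene.
    expr: (n_cells, n_genes); covariate: (n_cells,). Returns residuals."""
    X = np.column_stack([np.ones_like(covariate), covariate])
    beta, *_ = np.linalg.lstsq(X, expr, rcond=None)   # (2, n_genes) coefficients
    return expr - X @ beta

# Hypothetical data: 6 cells; genes 0-1 follow the cell cycle, gene 2 does not.
phase = np.array([0.0, 1.0, 2.0, 3.0, 2.0, 1.0])      # hidden cycle position
expr = np.column_stack([
    5 + 2 * phase,                             # cell-cycle gene
    1 + 3 * phase,                             # cell-cycle gene
    np.array([4.0, 4.0, 6.0, 6.0, 4.0, 6.0]),  # gene of interest
])
cc_score = expr[:, [0, 1]].mean(axis=1)   # per-cell cell-cycle score
adjusted = regress_out(expr, cc_score)    # cell-cycle genes become ~0
```

After adjustment the cell-cycle genes no longer dominate the variation between cells, so a differential expression test is less likely to return them as spurious hits.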

To control the quality of the platform, spike-in controls (RNAs with known concentrations) can be used to measure the quality of amplification. Another approach is to remove amplification bias by counting unique molecules instead of reads. In other words, we ask how many molecules were in the starting population before amplification, which represents the molecules in that cell. This perspective gets rid of amplification bias. For example, suppose two molecules start at a ratio of X to 2X. After amplification this ratio can be distorted, because one amplifies more than the other; counting molecules collapses the reads back down to individual molecules, in individual cells, within individual reactions. The strategy removes amplification bias by attaching a barcode to each molecule during reverse transcription, so that every molecule from every reaction in every cell is tagged; the barcodes are then read out and used to deconvolve the information during analysis. With this approach, single-cell profiles showed a 90.9% correlation with the averaged, mixed cell population.
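The difference between counting reads and counting unique molecules can be sketched as follows, mirroring the X versus 2X example. We assume each read has already been parsed into a (cell barcode, molecular barcode, gene) tuple and that barcodes contain no sequencing errors; real pipelines also merge near-identical molecular barcodes. The function name and reads are hypothetical.

```python
from collections import defaultdict

def count_reads_and_molecules(reads):
    """reads: iterable of (cell_barcode, umi, gene), one tuple per read.
    Returns (read_counts, molecule_counts), both keyed by (cell, gene)."""
    read_counts = defaultdict(int)
    umis = defaultdict(set)
    for cell, umi, gene in reads:
        read_counts[(cell, gene)] += 1
        umis[(cell, gene)].add(umi)          # duplicates of one molecule collapse
    molecule_counts = {key: len(s) for key, s in umis.items()}
    return dict(read_counts), molecule_counts

# Gene X: 1 molecule amplified to 8 reads; gene Y: 2 molecules, 3 reads each.
reads = ([("AAAC", "u1", "X")] * 8
         + [("AAAC", "u2", "Y")] * 3
         + [("AAAC", "u3", "Y")] * 3)
read_counts, molecule_counts = count_reads_and_molecules(reads)
```

By reads, X looks more abundant than Y (8 versus 6), inverting the truth; by molecules, the true 1:2 ratio is recovered.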

Drop-seq

- problem: cells have to be sorted with a microfluidic system of flow chambers, which is expensive and difficult to do

- flow chamber where individual cells are captured in droplets together with barcoded beads→ inside each droplet the cell is lysed and its mRNA is captured on the bead, ready for reverse transcription and amplification

- each droplet joins one cell with one bead carrying barcoded oligonucleotides→ then the droplets are broken up, the material is pooled and sequenced deeply→ we know which cell (droplet) each read came from because of its barcode

- can generate transcriptional profiles for many individual cells in parallel
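Assigning reads back to cells by barcode can be sketched as below. We assume each barcode read starts with a cell barcode followed by a molecular barcode (UMI), with lengths of 12 and 8 bases; these lengths follow the original Drop-seq bead design as we understand it and should be treated as an assumption to check against the protocol in use. Reads and gene names are hypothetical.

```python
from collections import Counter, defaultdict

CELL_BC_LEN = 12   # assumed cell-barcode length
UMI_LEN = 8        # assumed molecular-barcode length

def demultiplex(read_pairs):
    """read_pairs: iterable of (barcode_read, gene), where barcode_read starts
    with the cell barcode followed by the UMI. Returns
    {cell_barcode: Counter(gene -> number of distinct UMIs)}."""
    seen = defaultdict(set)                   # (cell, gene) -> set of UMIs
    for bc_read, gene in read_pairs:
        cell = bc_read[:CELL_BC_LEN]
        umi = bc_read[CELL_BC_LEN:CELL_BC_LEN + UMI_LEN]
        seen[(cell, gene)].add(umi)
    profiles = defaultdict(Counter)
    for (cell, gene), umis in seen.items():
        profiles[cell][gene] = len(umis)
    return dict(profiles)

reads = [
    ("AAAAAAAAAAAA" + "CCCCCCCC", "ACTB"),
    ("AAAAAAAAAAAA" + "CCCCCCCC", "ACTB"),   # duplicate read of the same molecule
    ("AAAAAAAAAAAA" + "GGGGGGGG", "ACTB"),
    ("TTTTTTTTTTTT" + "CCCCCCCC", "GAPDH"),
]
profiles = demultiplex(reads)   # one expression profile per cell barcode
```

Because every bead carries its own cell barcode, pooling all droplets into one sequencing run loses no information: the barcode prefix is enough to rebuild a per-cell profile.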

Spatial, in situ hybridisation

- break up the cells, do in situ hybridisation on individual components inside 'dots' representing individual cells→ can spatially resolve which cells are next to each other on a dish and predict their gene expression
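Once each 'dot' has a position, finding which cells are next to each other is a simple distance computation. The sketch below assumes 2D dot coordinates and a hypothetical neighbourhood radius; both the coordinates and the radius are illustrative, and a real analysis would choose the radius from the cell size on the dish.

```python
import math

def neighbours(coords, radius):
    """coords: {cell_id: (x, y)}. Returns {cell_id: sorted list of other
    cells within `radius` (Euclidean distance)}."""
    out = {c: [] for c in coords}
    ids = list(coords)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if math.dist(coords[a], coords[b]) <= radius:
                out[a].append(b)
                out[b].append(a)
    return {c: sorted(v) for c, v in out.items()}

# Hypothetical dot positions on a dish.
coords = {"c1": (0.0, 0.0), "c2": (3.0, 4.0), "c3": (10.0, 0.0)}
adjacent = neighbours(coords, radius=5.0)   # c1 and c2 are 5 units apart
```

The resulting adjacency can be combined with each cell's hybridisation signal to ask whether neighbouring cells share expression patterns.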

Conclusion

Rapid progress is being made in single-cell amplification. The best approaches combine available technologies: RNA-seq, microfluidics, novel cDNA labelling, etc. Future technology may take a multi-omics approach (RNA, DNA, chromatin, protein). We already have technology for measuring chromatin accessibility, so can we then ask which regions are accessible to TFs in single cells? Can we measure single-cell methylation? Future technologies will enable us to ask integrated questions about single cells.